Create an AKS cluster with AGIC and deploy workloads using Helm

In this article, we'll first see how to use Terraform to create the required resources for deploying an Azure Kubernetes Service (AKS) instance with the Azure Application Gateway Ingress Controller (AGIC) add-on, which utilizes the Azure Application Gateway to expose the selected services to the internet. Then, we'll deploy two Node.js HTTP servers with Helm from public Docker images, where they contact each other using Service DNS Names. You can find the code in https://github.com/aerocov/kubernetes-examples.

Table of Contents

- Prerequisites

- Overall Architecture

- Networking

- Create Network Resources

- Create Application Gateway

- Create AKS Cluster

- Create Role Assignments

- Deploy Infrastructure

- Applications Helm Chart

- Deploy Applications

Prerequisites

Before you begin, you'll need to have the following installed:

- Install Azure CLI

- Install Terraform

- Setup Terraform for Azure

- Install Kubectl

- Install Helm

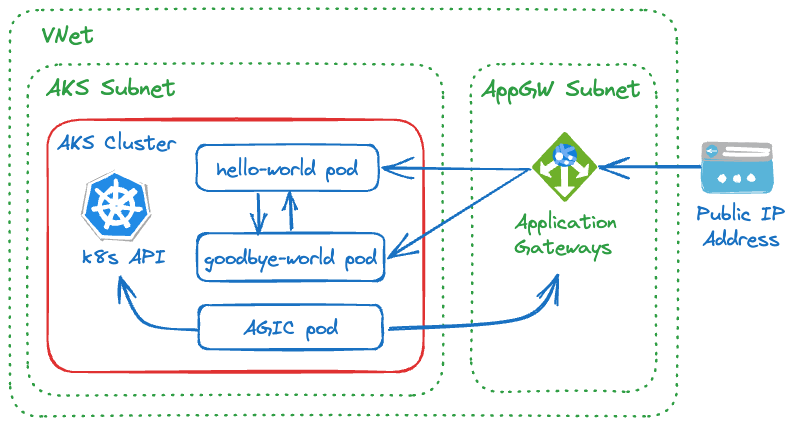

Overall Architecture

The following diagram shows the overall architecture of the solution:

We have two Node.js HTTP server, nodejs-hello-world and nodejs-goodbye-world.

They both have two GET endpoints:

| Service | Endpoint | Description |
|---|---|---|
| nodejs-hello-world | GET /hello | Returns Hello World |
| | GET /hello/call-goodbye | Calls the nodejs-goodbye-world service |
| nodejs-goodbye-world | GET /goodbye | Returns Goodbye World |
| | GET /goodbye/call-hello | Calls the nodejs-hello-world service |

When deployed, we simply access these endpoints through the Application Gateway public IP address. This way we can test the end-to-end flow and connectivity.

GOODBYE_SERVICE_ENDPOINT and HELLO_SERVICE_ENDPOINT are the environment variables that we'll pass to nodejs-hello-world and nodejs-goodbye-world respectively through a ConfigMap.

Their values, as we'll see, are the DNS names of the relevant Kubernetes Services we'll deploy.

This lets us test DNS name resolution and inter-service communication.
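As a rough illustration of what those values look like, the sketch below builds the DNS name Kubernetes gives a Service, in the form `<service>.<namespace>.svc.cluster.local`. It assumes the default namespace, the default `cluster.local` cluster domain, and Service port 80; the helper name `service_dns_name` is made up for this example.

```shell
#!/bin/sh
# Build the in-cluster DNS name of a Service.
# $1 = Service name, $2 = namespace (assumed to be "default" when omitted).
service_dns_name() {
  echo "$1.${2:-default}.svc.cluster.local"
}

# Endpoint values in the shape the two applications would receive them.
GOODBYE_SERVICE_ENDPOINT="http://$(service_dns_name nodejs-goodbye-world):80"
HELLO_SERVICE_ENDPOINT="http://$(service_dns_name nodejs-hello-world):80"

echo "$GOODBYE_SERVICE_ENDPOINT"
echo "$HELLO_SERVICE_ENDPOINT"
```

Pods in the same namespace can also resolve the short name (for example nodejs-goodbye-world), but the fully qualified form works from any namespace.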

Networking

We use Azure Container Networking Interface (CNI) networking, where each Pod gets an IP address from the cluster subnet and can be accessed directly, which eliminates the need for NAT devices.

CNI networking is recommended for production workloads due to its superior performance and scalability over the default kubenet networking.

However, it needs extra planning. The size of the VNet and subnets must be large enough to accommodate the number of Pods and Nodes you expect to deploy, considering the upgrade process requirements and scaling needs.

There are other variations of CNI networking that address these scalability and planning challenges, which you can read about in AKS Networking concepts.

Now, let's do some rough network planning:

- VNet: We have two main resources to deploy within the VNet, an AKS cluster and an Application Gateway. We can simply use a /16 CIDR block, which gives 65,536 addresses, the most that can be configured in a VNet used for Azure CNI. You should choose your address space based on your network topology and networking needs. We'll use the 10.2.0.0/16 address space for the VNet.

- AKS subnet: We can calculate the minimum number of IP addresses we need for the cluster with the following formula, where the extra node covers the one added during an upgrade:

  (number of nodes + 1) + ((number of nodes + 1) * maximum pods per node)

  Here we assume no horizontal pod scaling and a fixed pool of two nodes. The default maximum number of pods per node is 30, so the required address space is:

  (2 + 1) + ((2 + 1) * 30) = 93

  We'll use a /24 CIDR block, which gives us 256 IP addresses (251 usable, since Azure reserves five addresses in every subnet), well above the minimum we need. We use the 10.2.1.0/24 address space for the AKS subnet.

- Application Gateway subnet: We assume we use two AppGW instances with no autoscaling and no private frontend IPs. This means that we technically only need two IP addresses. However, it's highly recommended to use a /24 subnet to ensure sufficient space for autoscaling and maintenance upgrades. We use the 10.2.2.0/24 address space for our AppGW subnet.

- Kubernetes Service IP range: This is the range of virtual IPs that Kubernetes assigns to internal services such as the DNS service. There are important requirements that must be met for this range:

  - Must be smaller than /12

  - Must not be within the virtual network IP address range of your cluster

  - Must not overlap with any other virtual networks with which the cluster virtual network peers

  - Must not overlap with any on-premises IPs

  - Must not be within the ranges 169.254.0.0/16, 172.30.0.0/16, 172.31.0.0/16, or 192.0.2.0/24

  To meet the above requirements, we'll use the 10.1.0.0/16 address space for our Kubernetes Service IP range, which does not overlap with our VNet address space.

- Kubernetes DNS Service IP: This is the IP address of the cluster's DNS service. It must be within the Kubernetes Service address range and must not be the first address in the range. We'll use 10.1.0.10.
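The plan above can be sanity-checked with a few lines of shell arithmetic. This is only a sketch: the helper names (`ip_to_int`, `cidr_range`, `cidr_within`, `cidr_overlaps`, `required_ips`) are invented for the example, and it checks just the sizing formula, subnet containment, and the non-overlap and DNS IP rules, not every requirement listed.

```shell
#!/bin/sh
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address (as integers) of a CIDR block.
cidr_range() {
  base=$(ip_to_int "${1%/*}")
  size=$(( 1 << (32 - ${1#*/}) ))
  first=$(( $base / $size * $size ))
  echo "$first $(( $first + $size - 1 ))"
}

# Succeed if CIDR $1 lies entirely inside CIDR $2.
cidr_within() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -ge "$3" ] && [ "$2" -le "$4" ]
}

# Succeed if CIDRs $1 and $2 share at least one address.
cidr_overlaps() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Minimum addresses for the node subnet: $1 = nodes, $2 = max pods per node.
required_ips() {
  echo $(( ($1 + 1) + ($1 + 1) * $2 ))
}

# Values copied from the plan in this section.
vnet=10.2.0.0/16
aks=10.2.1.0/24
appgw=10.2.2.0/24
service_cidr=10.1.0.0/16
dns_ip=10.1.0.10

echo "AKS subnet needs at least $(required_ips 2 30) addresses"
cidr_within "$aks" "$vnet" && echo "AKS subnet is inside the VNet"
cidr_within "$appgw" "$vnet" && echo "AppGW subnet is inside the VNet"
cidr_overlaps "$aks" "$appgw" || echo "The two subnets do not overlap"
cidr_overlaps "$service_cidr" "$vnet" || echo "Service CIDR does not overlap the VNet"
cidr_within "$dns_ip/32" "$service_cidr" && echo "DNS service IP is inside the service CIDR"
first_service_ip=$(cidr_range "$service_cidr" | cut -d' ' -f1)
[ "$(ip_to_int "$dns_ip")" -ne "$first_service_ip" ] && echo "DNS service IP is not the first address"
```

Changing the node count or the maximum pods per node in the `required_ips` call shows quickly whether a /24 is still large enough.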

Create Network Resources

First, create a resource group:

resource "azurerm_resource_group" "rg" {
  name     = var.resource_group_name
  location = var.location
}

We use a Terraform input variables file (.tfvars) for a more streamlined process and to allow for multi-environment deployments.
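For illustration, a variables file for this walkthrough could be created as sketched below. The variable names come from the `var.*` references in the Terraform snippets of this article, and `rg-poc-aksdemo` and `poc-aksdemo` are inferred from the `az aks get-credentials` command used in the Deploy Applications section. The region and the remaining resource names are hypothetical placeholders, and each variable still needs a matching `variable` block in the configuration.

```shell
#!/bin/sh
# Write an example values.tfvars into the current directory.
# Values marked "hypothetical" are placeholders, not taken from the article.
cat > values.tfvars <<'EOF'
resource_group_name = "rg-poc-aksdemo"    # matches the az aks get-credentials call
app_name            = "poc-aksdemo"       # the cluster is named aks-${var.app_name}
location            = "australiaeast"     # hypothetical region
vnet_name           = "vnet-poc-aksdemo"  # hypothetical
public_ip_name      = "pip-poc-aksdemo"   # hypothetical
appgw_name          = "appgw-poc-aksdemo" # hypothetical
EOF

echo "wrote values.tfvars"
```

Keeping one such file per environment (for example a separate file for production) is what makes the same configuration reusable across environments.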

Based on the above design, we'll need to create a VNet with two subnets, one for AKS and one for AppGW:

resource "azurerm_virtual_network" "aksdemo" {
  name                = var.vnet_name
  address_space       = ["10.2.0.0/16"]
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
}

resource "azurerm_subnet" "aks" {
  name                 = "${azurerm_virtual_network.aksdemo.name}-aks-subnet"
  resource_group_name  = azurerm_resource_group.rg.name
  virtual_network_name = azurerm_virtual_network.aksdemo.name
  address_prefixes     = ["10.2.1.0/24"]
}

resource "azurerm_subnet" "appgw" {
  name                 = "${azurerm_virtual_network.aksdemo.name}-appgw-subnet"
  resource_group_name  = azurerm_resource_group.rg.name
  virtual_network_name = azurerm_virtual_network.aksdemo.name
  address_prefixes     = ["10.2.2.0/24"]
}

Create Application Gateway

Based on the design, we need a public IP address assigned to the Application Gateway frontend:

resource "azurerm_public_ip" "appgw" {
  name                = var.public_ip_name
  resource_group_name = azurerm_resource_group.rg.name
  location            = azurerm_resource_group.rg.location
  allocation_method   = "Static"
  sku                 = "Standard"
}

Then we can create the Application Gateway and reference the public IP address resource we just created in the frontend IP configuration:

# The local.* names used below are assumed to be defined in a locals block
# elsewhere in the configuration (not shown in this article).
resource "azurerm_application_gateway" "aks" {
  name                = var.appgw_name
  resource_group_name = azurerm_resource_group.rg.name
  location            = azurerm_resource_group.rg.location

  sku {
    name     = "Standard_v2"
    tier     = "Standard_v2"
    capacity = 2
  }

  gateway_ip_configuration {
    name      = "${var.appgw_name}-ip-configuration"
    subnet_id = azurerm_subnet.appgw.id
  }

  frontend_port {
    name = local.frontend_port_name
    port = 80
  }

  frontend_port {
    name = "httpsPort"
    port = 443
  }

  frontend_ip_configuration {
    name                 = local.frontend_ip_configuration_name
    public_ip_address_id = azurerm_public_ip.appgw.id
  }

  backend_address_pool {
    name = local.backend_address_pool_name
  }

  backend_http_settings {
    name                  = local.http_setting_name
    cookie_based_affinity = "Disabled"
    port                  = 80
    protocol              = "Http"
    request_timeout       = 30
  }

  http_listener {
    name                           = local.http_listener_name
    frontend_ip_configuration_name = local.frontend_ip_configuration_name
    frontend_port_name             = local.frontend_port_name
    protocol                       = "Http"
  }

  request_routing_rule {
    name                       = local.request_routing_rule_name
    rule_type                  = "Basic"
    priority                   = 100
    http_listener_name         = local.http_listener_name
    backend_address_pool_name  = local.backend_address_pool_name
    backend_http_settings_name = local.http_setting_name
  }

  depends_on = [azurerm_virtual_network.aksdemo, azurerm_public_ip.appgw]
}

Create AKS Cluster

Now we can create the AKS instance based on the specifications we discussed earlier:

resource "azurerm_kubernetes_cluster" "aks" {
  name                = "aks-${var.app_name}"
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  dns_prefix          = var.app_name

  default_node_pool {
    name           = "agentpool"
    node_count     = 2
    vm_size        = "Standard_D2_v2"
    vnet_subnet_id = azurerm_subnet.aks.id
  }

  identity {
    type = "SystemAssigned"
  }

  # CNI (instead of kubenet)
  network_profile {
    network_plugin = "azure"
    network_policy = "azure"
    service_cidr   = "10.1.0.0/16"
    dns_service_ip = "10.1.0.10"
    outbound_type  = "loadBalancer"
  }

  # AGIC
  ingress_application_gateway {
    gateway_id = azurerm_application_gateway.aks.id
  }

  depends_on = [azurerm_virtual_network.aksdemo, azurerm_application_gateway.aks]
}

Using the default_node_pool block, we specify the number of nodes and the VM size. We don't use any autoscaling features for this example.

We also specify the VNet subnet ID where the nodes will be deployed to.

An AKS cluster needs an identity to access Azure resources. We can use a Service Principal or a Managed Identity. To keep it simple, we use a System Assigned Managed Identity.

The network_profile block allows enabling CNI networking by setting network_plugin to azure.

We use Azure Network Policy Manager, azure, for network_policy.

The service_cidr and dns_service_ip are the values we discussed in the Networking section.

With the ingress_application_gateway block we can enable the AGIC add-on by specifying the gateway_id of the Application Gateway resource we created earlier.

The AGIC service continuously monitors changes in the cluster resources and configures the Application Gateway through Azure Resource Manager (ARM) to route traffic to the relevant services.

Create Role Assignments

If you try to deploy the AKS cluster now, you'll get some permission errors. We need to assign some roles to AKS, AGIC, and AppGW principals:

# aks > subnet
resource "azurerm_role_assignment" "aks_subnet" {
  scope                = azurerm_subnet.aks.id
  role_definition_name = "Network Contributor"
  principal_id         = azurerm_kubernetes_cluster.aks.identity[0].principal_id
  depends_on           = [azurerm_virtual_network.aksdemo]
}

# aks > appgw
resource "azurerm_role_assignment" "aks_appgw" {
  scope                = azurerm_application_gateway.aks.id
  role_definition_name = "Contributor"
  principal_id         = azurerm_kubernetes_cluster.aks.identity[0].principal_id
  depends_on           = [azurerm_application_gateway.aks]
}

# agic > appgw
resource "azurerm_role_assignment" "agic_appgw" {
  scope                            = azurerm_application_gateway.aks.id
  role_definition_name             = "Contributor"
  principal_id                     = azurerm_kubernetes_cluster.aks.ingress_application_gateway[0].ingress_application_gateway_identity[0].object_id
  depends_on                       = [azurerm_application_gateway.aks]
  skip_service_principal_aad_check = true
}

# agic > appgw resource group
resource "azurerm_role_assignment" "agic_appgw_rg" {
  scope                = azurerm_resource_group.rg.id
  role_definition_name = "Reader"
  principal_id         = azurerm_kubernetes_cluster.aks.ingress_application_gateway[0].ingress_application_gateway_identity[0].object_id
  depends_on           = [azurerm_application_gateway.aks]
}

Deploy Infrastructure

Now we can deploy all the resources using Terraform:

# where all terraform files, including values.tfvars, are located
cd ./terraform
terraform init
terraform validate
terraform apply --var-file=values.tfvars

To destroy the resources:

terraform destroy --var-file=values.tfvars

If you are looking for a GitHub workflow to deploy resources using Terraform, you can check out the Terraform Deploy reusable workflow.

Applications Helm Chart

To deploy the Node.js workloads, we'll use Helm. Helm is a package manager for Kubernetes, which allows us to define, install, and upgrade Kubernetes applications.

Let's assume we use the default namespace for our workloads.

For each application we create a Deployment, a ConfigMap, and a Service, and we add a rule for it to a shared Ingress resource.

The Deployment gives us a way to declaratively run the applications in the cluster. A Deployment can create and destroy Pods dynamically to match the desired state of our cluster. This means Pod IPs can change at any time, so the applications can't rely on them to communicate with each other.

A Service is an abstraction that allows exposing groups of Pods over a network. We use the ClusterIP type, which makes each application reachable within the cluster.

We then expose our Services to the outside of the cluster using an Ingress resource.

We also use a ConfigMap to pass non-sensitive environment variables to our applications.

We create Helm chart templates for the mentioned resources. We parameterize these templates with values we define in a values.yaml file. These values are the specs of each application.

First, we create a values.yaml file based on what we discussed in the Overall Architecture section:

apps:
  - name: nodejs-hello-world
    image:
      repository: aerocov/nodejs-hello-world
      pullPolicy: Always
      tag: '1.0.0'
    service:
      type: ClusterIP
      port: 80
      targetPort: 3000
    ingress:
      enabled: true
      path: /hello
    configMap:
      GOODBYE_SERVICE_ENDPOINT: http://nodejs-goodbye-world.default.svc.cluster.local:80
  - name: nodejs-goodbye-world
    image:
      repository: aerocov/nodejs-goodbye-world
      pullPolicy: Always
      tag: '1.0.0'
    service:
      type: ClusterIP
      port: 80
      targetPort: 3500
    ingress:
      enabled: true
      path: /goodbye
    configMap:
      HELLO_SERVICE_ENDPOINT: http://nodejs-hello-world.default.svc.cluster.local:80

We have an apps array, where each item holds the specs of one application, nodejs-hello-world or nodejs-goodbye-world.

It includes the image specs, the service specs, the ingress specs, and the configMap.

Let's create the template for ConfigMap that creates simple property-like key-value pairs:

{{- range .Values.apps }}
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .name }}-config
data:
  {{- range $key, $value := .configMap }}
  {{ $key }}: {{ $value | quote | nindent 4 }}
  {{- end }}
{{- end }}

The Go template syntax is used for the templates. Now we can create the template for the Deployment:

{{- range .Values.apps }}
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .name }}
  labels:
    app: {{ .name }}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: {{ .name }}
  template:
    metadata:
      labels:
        app: {{ .name }}
      annotations:
        checksum/config: {{ toJson . | sha256sum }}
    spec:
      containers:
        - name: {{ .name }}
          image: "{{ .image.repository }}:{{ .image.tag }}"
          imagePullPolicy: {{ .image.pullPolicy }}
          ports:
            - containerPort: {{ .service.targetPort }}
          readinessProbe:
            httpGet:
              path: /
              port: {{ .service.targetPort }}
            periodSeconds: 30
            timeoutSeconds: 10
          envFrom:
            - configMapRef:
                name: {{ .name }}-config
{{- end }}

We added a checksum/config annotation to the Pod template to make sure that changes to an application's values are detected and trigger a new rollout of its Deployment.

We also added a readinessProbe to let the ingress controller monitor the health of the services.
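To see why the checksum/config annotation forces a rollout, the sketch below hashes two hand-written JSON strings that differ only in the image tag. It only mimics the idea behind `toJson . | sha256sum`: the exact JSON that Helm produces will differ, the helper name `sha256_of` is made up, and the script assumes either `sha256sum` or `shasum` is available.

```shell
#!/bin/sh
# Hash a string with SHA-256, using whichever tool is installed.
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    printf '%s' "$1" | sha256sum | cut -d' ' -f1
  else
    printf '%s' "$1" | shasum -a 256 | cut -d' ' -f1
  fi
}

# Two versions of one application's values, differing only in the image tag.
before=$(sha256_of '{"name":"nodejs-hello-world","image":{"tag":"1.0.0"}}')
after=$(sha256_of '{"name":"nodejs-hello-world","image":{"tag":"1.0.1"}}')

echo "before: $before"
echo "after:  $after"

# A different hash means a different Pod template, which Kubernetes rolls out.
if [ "$before" != "$after" ]; then
  echo "annotation changed, so the Deployment would roll out new Pods"
fi
```

Because the template hashes the whole application entry, a change to any of its values, including a ConfigMap value, changes the annotation. That matters because editing a ConfigMap alone does not restart the Pods that consume it.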

To expose the applications to the cluster network, we create the Service template:

{{- range .Values.apps }}
---
apiVersion: v1
kind: Service
metadata:
  name: {{ .name }}
  labels:
    app: {{ .name }}
spec:
  type: {{ .service.type }}
  ports:
    - port: {{ .service.port }}
      targetPort: {{ .service.targetPort }}
  selector:
    app: {{ .name }}
{{- end }}

Finally, we create the Ingress template:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: apps-ingress
  annotations:
    kubernetes.io/ingress.class: azure/application-gateway
spec:
  rules:
    {{- range .Values.apps }}
    {{- if .ingress.enabled }}
    - http:
        paths:
          - path: {{ .ingress.path }}
            pathType: Prefix
            backend:
              service:
                name: {{ .name }}
                port:
                  number: {{ .service.port }}
    {{- end }}
    {{- end }}

We use the kubernetes.io/ingress.class annotation with the value azure/application-gateway to select AGIC as the ingress controller.
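Before installing, it can help to lint the chart and render it locally to check that the templates produce valid manifests. The function below is a minimal sketch: it assumes the helm CLI is installed and that the chart lives in the app folder with the release name aksdemo (both taken from the install command used in this article). The names `check_chart` and `rendered.yaml` are chosen for the example.

```shell
#!/bin/sh
# Lint the chart, render it to a file, and count the rendered resources.
# $1 = chart directory (assumed to be "app" when omitted).
check_chart() {
  chart_dir=${1:-app}
  helm lint "$chart_dir" &&
    helm template aksdemo "$chart_dir" > rendered.yaml &&
    echo "rendered resources: $(grep -c '^kind:' rendered.yaml)"
}
```

Run `check_chart` from the folder that contains the chart and inspect rendered.yaml to confirm that the indentation and values look as expected.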

Deploy Applications

Now that we have the infrastructure and the Helm chart, we can deploy the applications. First update your kube-config:

# add cluster credentials to kube-config
az aks get-credentials --resource-group rg-poc-aksdemo --name aks-poc-aksdemo

Then deploy the applications using Helm:

# in ./aks-agic-dns where the `app` folder is located
helm install aksdemo app

After a short period of time you should be able to see the applications running:

kubectl get pods

To verify the end-to-end flow, using any HTTP client:

- Grab the public IP of the ingress controller, which is the Application Gateway public IP address you can see in the Terraform output.

- GET http://<public-ip>/hello/, this will return Hello World from the nodejs-hello-world service

- GET http://<public-ip>/goodbye/, this will return Goodbye World from the nodejs-goodbye-world service

- GET http://<public-ip>/hello/call-goodbye, this will call the nodejs-goodbye-world service from the nodejs-hello-world service by its DNS name and return the result

- GET http://<public-ip>/goodbye/call-hello, this will call the nodejs-hello-world service from the nodejs-goodbye-world service by its DNS name and return the result
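The four requests above can be scripted. The following is a small sketch that assumes curl is installed; the function names `endpoint_urls` and `smoke_test` are invented for this example, and the IP address passed in is whatever your Application Gateway was assigned.

```shell
#!/bin/sh
# Print the four test URLs for a given Application Gateway public IP ($1).
endpoint_urls() {
  for p in /hello/ /goodbye/ /hello/call-goodbye /goodbye/call-hello; do
    echo "http://$1$p"
  done
}

# Request each URL and print its HTTP status code next to it.
smoke_test() {
  endpoint_urls "$1" | while read -r url; do
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$url")
    echo "$code $url"
  done
}
```

For example, `smoke_test 203.0.113.10` (a placeholder address) prints one line per endpoint. A 200 on the two call-* routes suggests that DNS resolution and service-to-service traffic inside the cluster are working.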